Outline

Summary

This article describes how to resolve a truncate table failure whose log reports: cannot be deleted since [path] is snapshottable and already has snapshots.

Details

Problem description

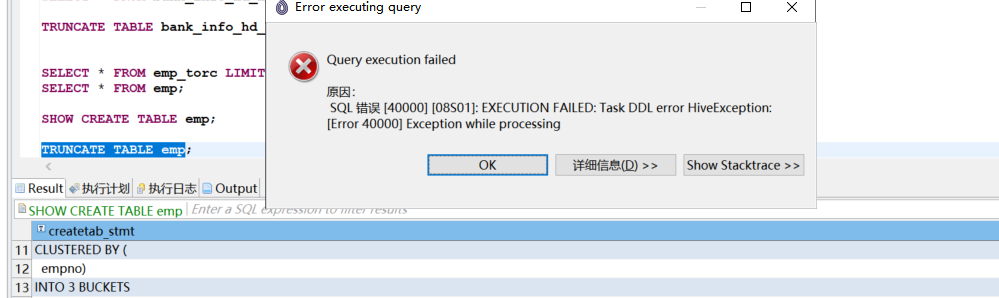

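When a customer uses the TBAK tool to synchronize an ORC transactional table, the task may fail and leave a snapshot behind. The leftover snapshot causes truncate on that table to fail, and the statement reports the following error: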

SQL 错误 [40000] [08S01]: EXECUTION FAILED: Task DDL error HiveException: [Error 40000] Exception while processing

java.sql.SQLException: EXECUTION FAILED: Task DDL error HiveException: [Error 40000] Exception while processing

EXECUTION FAILED: Task DDL error HiveException: [Error 40000] Exception while processing

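Dropping the table and creating it again does not report an error, but the data is not actually cleared: the table's data directory still exists on HDFS.

The log /var/log/inceptorX/hive-server2.log on the server that hosts the Inceptor Server role reports the following error: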

2021-11-29 13:39:20,504 ERROR exec.DDLTask: (DDLTask.java:execute(629)) [HiveServer2-Handler-Pool: Thread-84248(SessionHandle=389b6104-4d92-4372-b925-c7efb7f66cc9)] - org.apache.hadoop.hive.ql.metadata.HiveException: Exception while processing

at org.apache.hadoop.hive.ql.exec.DDLTask.truncateTable(DDLTask.java:5889)

at org.apache.hadoop.hive.ql.exec.DDLTask.execute(DDLTask.java:524)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:164)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:89)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2716)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:2433)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1764)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1599)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1566)

at io.transwarp.inceptor.server.InceptorSQLOperation.runInternal(InceptorSQLOperation.scala:66)

at org.apache.hive.service.cli.operation.Operation.run(Operation.java:295)

at org.apache.hive.service.cli.session.HiveSessionImpl.executeStatementInternal(HiveSessionImpl.java:433)

at org.apache.hive.service.cli.session.HiveSessionImpl.executeStatementWithParamsAndPropertiesAsync(HiveSessionImpl.java:400)

at org.apache.hive.service.cli.CLIService.executeStatementWithParamsAndPropertiesAsync(CLIService.java:328)

at io.transwarp.inceptor.server.InceptorCLIService.executeStatementWithParamsAndPropertiesAsync(InceptorCLIService.scala:149)

at org.apache.hive.service.cli.thrift.ThriftCLIService.ExecuteStatement(ThriftCLIService.java:542)

at org.apache.hive.service.cli.thrift.TCLIService$Processor$ExecuteStatement.getResult(TCLIService.java:1857)

at org.apache.hive.service.cli.thrift.TCLIService$Processor$ExecuteStatement.getResult(TCLIService.java:1842)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)

at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56)

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:285)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.io.IOException: Failed to move to trash: hdfs://nameservice1/inceptor1/user/hive/warehouse/default.db/hive/emp

at org.apache.hadoop.fs.TrashPolicyDefault.moveToTrash(TrashPolicyDefault.java:164)

at org.apache.hadoop.fs.Trash.moveToTrash(Trash.java:114)

at org.apache.hadoop.fs.Trash.moveToAppropriateTrash(Trash.java:95)

at org.apache.hadoop.hive.shims.Hadoop23Shims.moveToAppropriateTrash(Hadoop23Shims.java:255)

at org.apache.hadoop.hive.ql.exec.DDLTask.truncateTable(DDLTask.java:5875)

... 24 more

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.protocol.SnapshotException): The directory /inceptor1/user/hive/warehouse/default.db/hive/emp cannot be deleted since /inceptor1/user/hive/warehouse/default.db/hive/emp is snapshottable and already has snapshots

at org.apache.hadoop.hdfs.server.namenode.FSDirSnapshotOp.checkSnapshot(FSDirSnapshotOp.java:224)

at org.apache.hadoop.hdfs.server.namenode.FSDirRenameOp.validateRenameSource(FSDirRenameOp.java:572)

at org.apache.hadoop.hdfs.server.namenode.FSDirRenameOp.unprotectedRenameTo(FSDirRenameOp.java:361)

at org.apache.hadoop.hdfs.server.namenode.FSDirRenameOp.renameTo(FSDirRenameOp.java:299)

at org.apache.hadoop.hdfs.server.namenode.FSDirRenameOp.renameToInt(FSDirRenameOp.java:244)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.renameTo(FSNamesystem.java:3729)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.rename2(NameNodeRpcServer.java:911)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.rename2(ClientNamenodeProtocolServerSideTranslatorPB.java:599)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:969)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2217)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2213)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1996)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2211)
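The directory that blocks the truncate appears in the SnapshotException line of the trace above. As a minimal sketch, a small shell function can pull that path out of the log instead of reading the stack trace by hand. The function name snapshottable_paths_from_log is hypothetical, and the pattern assumes the message wording shown in this article.

```shell
# Hypothetical helper: read log text on stdin and print each distinct
# directory named in an "is snapshottable and already has snapshots" error.
snapshottable_paths_from_log() {
  sed -n 's/.*cannot be deleted since \(.*\) is snapshottable and already has snapshots.*/\1/p' | sort -u
}

# Example call (log path taken from this article; X is the Inceptor instance number):
# snapshottable_paths_from_log < /var/log/inceptorX/hive-server2.log
```

If several tables are affected, the function prints one path per table, each of which needs the cleanup described in the solution.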

Solution

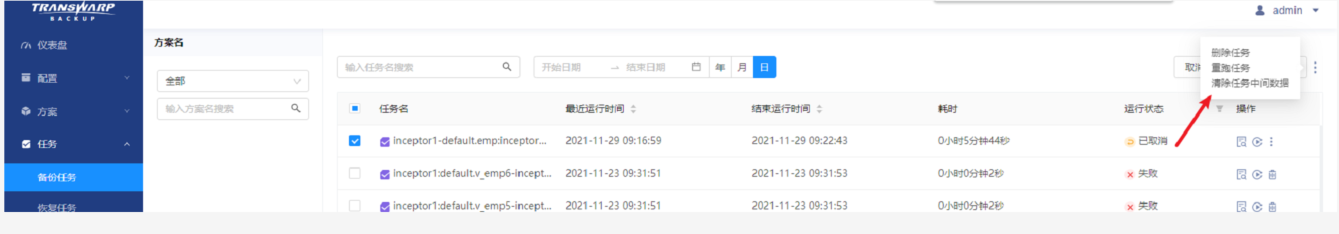

1. In TBAK, click the option to clean up intermediate data.

2. Clean up the snapshot on HDFS.

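List the snapshottable directories on HDFS (some older versions do not support this command) and confirm whether the path from the error, /inceptor1/user/hive/warehouse/default.db/hive/emp, is among them:

$ hdfs lsSnapshottableDir

Then, using the path /inceptor1/user/hive/warehouse/default.db/hive/emp from the error, check which snapshots exist on HDFS: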

$ hdfs dfs -ls /inceptor1/user/hive/warehouse/default.db/hive/emp/.snapshot

From this output, note the two values that identify a snapshot: the snapshottable directory path, /inceptor1/user/hive/warehouse/default.db/hive/emp, and the snapshot name, which here is default-emp-20211129092141.
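When a directory holds more than one leftover snapshot, the names can be extracted from the listing rather than copied by hand. The following is a sketch only: the function name snapshot_names is hypothetical, and it assumes the usual hdfs dfs -ls layout in which each entry line ends with the full path under .snapshot.

```shell
# Hypothetical helper: read `hdfs dfs -ls <dir>/.snapshot` output on stdin
# and print one snapshot name per line. Lines that do not end in a
# /.snapshot/<name> path (such as the "Found N items" header) are skipped.
snapshot_names() {
  sed -n 's#.*/\.snapshot/\([^/]*\)$#\1#p'
}

# Example call, using the directory from this article:
# hdfs dfs -ls /inceptor1/user/hive/warehouse/default.db/hive/emp/.snapshot | snapshot_names
```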

Delete the snapshot:

$ hdfs dfs -deleteSnapshot [snapshottable directory path] [snapshot name]

For the emp table, the command is:

$ hdfs dfs -deleteSnapshot /inceptor1/user/hive/warehouse/default.db/hive/emp default-emp-20211129092141

After that, truncating the table succeeds.
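If a failed task left several snapshots on the same directory, the delete command has to be repeated once per snapshot name. A minimal sketch of that loop follows. The function name delete_snapshots and the DRY_RUN variable are hypothetical. By default it only prints the commands so they can be reviewed before anything is removed.

```shell
# Hypothetical helper: delete the named snapshots of one snapshottable directory.
# Usage: delete_snapshots <snapshottable-dir> <snapshot-name>...
# Prints the commands by default; set DRY_RUN=0 to actually run them.
delete_snapshots() {
  dir="$1"
  shift
  for name in "$@"; do
    if [ "${DRY_RUN:-1}" = "0" ]; then
      hdfs dfs -deleteSnapshot "$dir" "$name"
    else
      echo "hdfs dfs -deleteSnapshot $dir $name"
    fi
  done
}

# Review first, then run for real:
# delete_snapshots /inceptor1/user/hive/warehouse/default.db/hive/emp default-emp-20211129092141
# DRY_RUN=0 delete_snapshots /inceptor1/user/hive/warehouse/default.db/hive/emp default-emp-20211129092141
```

Keeping the dry run as the default is a deliberate choice here, since a deleted snapshot cannot be restored.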